Mo-Sys Cinematic XR Focus lets focus pullers pull focus seamlessly from real objects, through the LED wall, to virtual objects that appear to be positioned behind the wall, using the same wireless lens control system they already use today. The reverse pull, from virtual back to real, is equally possible.

Enhancing Final Pixel Scene Interaction

Cost advantages and talent lighting benefits make final pixel LED volume shoots highly attractive. Until now, LED volumes have predominantly been used as scene backdrops rather than as part of an interactive studio, but Cinematic XR Focus enables dynamic interaction between real and virtual elements in the LED volume, elevating the realism of the shots.

Pull focus between the real and virtual

Cinematic XR Focus software enhances final pixel LED volume cinematography by enabling DoPs to pull focus between real foreground objects, such as actors, and virtual objects displayed on the LED wall, such as cars. Previously this was only achievable in green/blue screen shoots with downstream post-production compositing.

Unleash your storytelling possibilities today

Mo-Sys Cinematic XR Focus empowers virtual production filmmakers to shoot more creative shots and more ambitious setups, saving them valuable time and enhancing their storytelling. With Cinematic XR Focus, cinematographers are free to use more of the creative cinematic toolset traditionally available to regular film crews.

Works with VP Pro and StarTracker

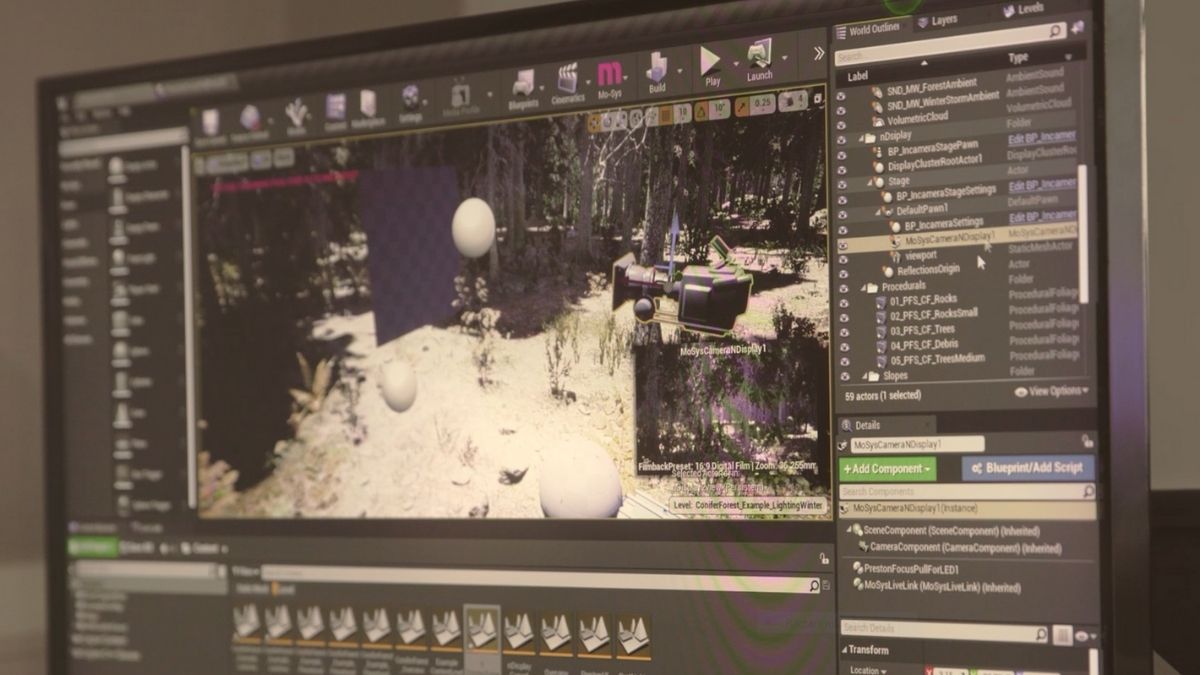

Mo-Sys Cinematic XR Focus synchronises the lens controller with the Unreal Engine graphics, using StarTracker to constantly track the distance between the camera and the LED wall. The software manages the transition between the lens controller driving the physical lens focus pull and Unreal Engine driving the virtual focus pull.
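The handoff described above can be pictured with a minimal sketch. It is not Mo-Sys code: the function name `split_focus`, the `FocusSplit` type, and the simple rule "the lens drives focus up to the wall, the engine drives it beyond the wall" are assumptions made purely for illustration. The real product may blend the two differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FocusSplit:
    """Hypothetical result of dividing one focus request between lens and engine."""
    lens_focus_m: float     # distance sent to the physical lens motor
    virtual_focus_m: float  # focus distance used by the render engine
    driver: str             # "lens" or "engine": which side is doing the pull


def split_focus(requested_m: float, wall_distance_m: float) -> FocusSplit:
    """Divide a requested focus distance at the LED wall plane.

    Assumed rule (illustrative only): requests in front of the wall move the
    physical lens; requests beyond the wall hold the lens on the wall and
    move the virtual focus distance instead.
    """
    if requested_m <= 0 or wall_distance_m <= 0:
        raise ValueError("distances must be positive")
    if requested_m <= wall_distance_m:
        # Real object in front of the wall: the lens motor does the work.
        return FocusSplit(requested_m, wall_distance_m, "lens")
    # Virtual object "behind" the wall: keep the wall optically sharp and
    # let the engine render depth of field for the requested distance.
    return FocusSplit(wall_distance_m, requested_m, "engine")


if __name__ == "__main__":
    wall_m = 5.0  # tracked camera-to-wall distance, assumed constant here
    for requested in (2.0, 4.0, 5.0, 6.5, 8.0):
        print(requested, split_focus(requested, wall_m))
```

In this sketch the wall distance is a fixed number; in practice it would be updated continuously from the tracking data as the camera moves, so the handoff point moves with the camera.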

Product Features

| Feature | Description |
|---|---|
| Mo-Sys tracking data over LiveLink | Supports StarTracker Classic, StarTracker Max, encoded heads and handwheels, and extends to all future robotics and tracking hardware. |
| nDisplay for LED volumes | Receive tracking and lens data in nDisplay. |
| Live video I/O | Automatic configuration of compositing pipeline. |
| Drag-and-drop virtual camera | Pre-fabricated camera for live compositing. |
| Lens distortion | Entirely automated distortion and depth of field for calibrated fixed and zoom lenses. |
| AR | Particle effects, glow, depth of field and shadows and reflections with Epic's latest compositing. |
| StarTracker control | Detect and configure StarTrackers directly from Unreal. |
| Mo-Sys data transmitter | Control Mo-Sys robotics from Unreal. |
| System status monitor | See at a glance the state of the VP system. |
| Mo-Sys keyer | Enhanced internal chromakeyer. |
| External keyer integration | 'Pre-Keyer' and 'Post-Keyer' modes of operation enabling compositing in-engine or in-keyer. |
| Garbage mattes | 3D mattes for a 360 virtual environment beyond the greenscreen. |
| VP layouts | Custom editor layouts for different production situations. |
| Animation triggers | Interface for triggering Level Sequence animations on keypress on multiple engines. |
| Multi-engine interface | Low-level fast customisable interface for multi-camera/engine. |
| Stage manager | Easily move the physical 'stage' around the virtual space. |
| Data recording with timecode | Synchronous multi-source tracking and lens data recording. |
| StarTracker internal recording | Automatic high frame-rate recording in StarTracker. |
| VFX sample bundle | Project/media/data bundle that demos how to use the recorded data in Maya, Houdini and Nuke. |
| FBX data export | Virtual camera and lens distortion export to traditional pipelines. |
| Take management | Metadata tools for managing, modifying and exporting takes. |
| External SDI recorder integration | Fire record on Blackmagic HyperDeck recorders automatically with timecode and metadata. |
| Arri, Sony Venice and RED camera interfaces | Gather metadata and trigger recording from multiple cameras. |
| Studio mode | Multi-camera support for StarTracker Studio. |
| Hardware keyer integration | Switch presets on Blackmagic Ultimatte from Unreal blueprints. |
| Switcher integration | Cut cameras on Blackmagic / Sony XVS / Grass Valley switchers. |
| Cinematic XR focus | Automatic motor control to enable pulling focus from the real into the virtual world. |
| LED Multicam | Switched and interleaved modes of multi-camera. |
| LED volume set extensions | Configure XR extensions to your LED wall with feathering. |
| Automated XR geometry correction | Store and read geometry corrections for multiple cameras. |
| Dynamic XR colour correction | Apply different colour corrections based on camera position. |
| LED test patterns | Test patterns for aligning XR and LED volume. |
| Support and maintenance | Comprehensive support and maintenance, including regular updates with new features as they are developed. |

Make an Enquiry

We are committed to providing unrivalled support and service, ensuring we maintain an ongoing relationship with our clients that keeps us fully engaged. For more information about how we can help your project, please contact us using the details below.

Use the contact form to let us know you’re interested in our products and someone from our helpful team will get back to you.